In the ever-evolving landscape of microservices architecture, organizations run a large number of APIs across their services. As the number of services and APIs within a Kubernetes cluster grows, maintaining security, monitoring, and traffic routing quickly becomes overwhelming, and managing these APIs efficiently becomes a real challenge for organizations deploying applications on Kubernetes. Istio, an open source service mesh platform, lets organizations tackle these issues head-on by managing APIs through an API gateway.

In this blog post, we will explore how Istio helps Kubernetes users simplify and streamline API management using a gateway, while offering enhanced security and control over all service communication within a cluster. We will walk through use cases such as host-based routing, rate limiting, and CORS with pre-flight requests to show how Istio Gateway simplifies managing API traffic. So, let's get started.

What is an API Gateway?

An API gateway acts as an intermediary between clients and backend services, much like a reverse proxy. It accepts an API request, routes it to the necessary services (combining their responses where needed), and returns the appropriate result to the client.

An API gateway behaves as a single point of entry into the system for microservices-based applications. It is responsible for request routing, composition, and policy enforcement, along with authentication, authorization, monitoring, load balancing, and response handling. By offloading non-functional requirements to the infrastructure layer, it lets developers focus on core business logic, which can speed up product releases.

Features of an API Gateway

API governance

API gateways provide a unified interface for managing and governing APIs across an organization. They offer capabilities such as API versioning, rate limiting, request throttling, and caching, ensuring consistent and controlled access to APIs.

Security and authentication

API gateways serve as a security layer, protecting backend services from unauthorized access and potential threats. They can handle authentication and authorization, enforce access control policies, and provide mechanisms like API key management, OAuth, or JWT verification.

Traffic management and load balancing

API gateways distribute incoming requests across multiple backend services to ensure load balancing and improve performance. They can perform intelligent routing based on factors like geographical location, service availability, or specific routing rules defined by the organization.

Service composition and aggregation

API gateways enable the composition of multiple backend services into a single API, simplifying the client-side integration process. They can aggregate data from multiple services, combine responses, or transform the data structure to meet the specific requirements of the client.

Although the API gateway concept offloads major tasks from the application, choosing the right gateway solution and designing it for API management is not always easy. Let's look at some of the challenges platform engineers face when doing this on Kubernetes.

Challenges with API management on Kubernetes

Security and access control: Securing microservices and controlling access to their endpoints are critical aspects of modern application development. While Kubernetes provides a robust platform for container orchestration, integrating an API gateway into this environment raises its own security and access control challenges.

Updating APIs: With continuous development, APIs are updated frequently, and some changes need to be tested on real traffic. On Kubernetes, achieving something like A/B testing or weighted routing can be challenging because there is no out-of-the-box solution for it.

Scalable & consistent performance: APIs sometimes experience sudden spikes or drops in traffic. Without a properly configured gateway, it is hard to rate-limit traffic or block abusive API consumers.

Handling Cross-Origin Resource Sharing errors and Pre-flight: Many gateway solutions do not currently make it easy to implement CORS and pre-flight handling that allows some cross-origin requests while rejecting others. Misconfigured CORS rules can lead to browsers blocking legitimate requests, or to APIs receiving unwanted requests from browsers.

Given these challenges, we need a solution that makes services easy to manage and deploy. Istio service mesh offers a gateway solution that can help address them.

What is Istio Gateway?

Istio Gateway acts as the entry point for external traffic into the service mesh, allowing fine-grained control over traffic routing and enabling advanced features such as load balancing, SSL/TLS termination, and access control.

In a microservice-based architecture, managing the complex network of services and handling traffic routing, security, and observability can be a tedious task. This is where Istio Gateway comes into play as a powerful tool in the Istio service mesh ecosystem.
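The setup section later applies a gateway.yaml whose contents are not shown. As a minimal sketch of what an Istio Gateway resource can look like, the following accepts HTTP on port 80 and terminates TLS on port 443 for example.com subdomains. The resource name “api-gateway”, the hosts, and the TLS secret “api-example-com-cert” are hypothetical; “istio: ingressgateway” is the label carried by the pods of Istio's default ingress gateway, and the secret must live in the same namespace as those pods.

```yaml
# Sketch only: the name, hosts, and secret below are hypothetical.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: api-gateway                        # hypothetical name
spec:
  selector:
    istio: ingressgateway                  # binds to the default Istio ingress gateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*.example.com"
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE                         # terminate TLS at the gateway
      credentialName: api-example-com-cert # hypothetical Kubernetes TLS secret
    hosts:
    - "*.example.com"
```

Virtual services then attach routing rules to this Gateway by listing its name in their “gateways” field.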

In general, a service mesh (such as Istio Service Mesh) and an API gateway (such as the Istio Ingress Gateway) overlap in some capabilities, but each also has unique ones.

Unique Capabilities

Service Mesh:

It has resilience mechanisms like timeouts, retries, circuit-breaking, etc.

It gives service clients more insight into the topology of the architecture, enabling client-side load balancing, service discovery, and request routing.

API Gateways:

It aggregates, abstracts, and exposes APIs.

It enforces user-based zero-trust security policies at the edge, such as tight control over the data going in and out through allowed headers and requests.

It also helps establish security trust domains using JWT or OAuth/SSO.

Let us look at the supporting components that help shape an API gateway in the Istio ecosystem.

Istio virtual service

Istio Virtual Service defines the routing rules and policies for incoming traffic within the service mesh. It lets you configure how requests are routed to specific services and endpoints based on criteria such as HTTP headers, URL paths, and weights. The same virtual service can apply both to gateways and to sidecars inside the mesh. Virtual services enable features like traffic splitting, traffic mirroring, load balancing, and fault injection.
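A sketch of those ideas, with hypothetical names: this virtual service is bound both to a Gateway called “api-gateway” and, through the reserved “mesh” keyword, to the sidecars inside the mesh. It routes to backend-v1 on port 3000 (the sample service used later in this post) and injects a fixed 5-second delay into 10% of requests, a simple form of fault injection for resilience testing.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: backend-v1-faults      # hypothetical name
spec:
  hosts:
  - backend-v1                 # in-mesh service name
  - "v1.example.com"           # external host served through the gateway
  gateways:
  - api-gateway                # hypothetical Gateway resource
  - mesh                       # reserved name: also apply to sidecars in the mesh
  http:
  - fault:
      delay:
        percentage:
          value: 10.0          # delay 10% of requests
        fixedDelay: 5s
    route:
    - destination:
        host: backend-v1
        port:
          number: 3000
```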

Istio destination rule

The Istio Destination Rule complements the functionality of Virtual Service by defining the policies and settings specific to a particular service or version. It provides a way to configure the behavior of the traffic as it reaches the destination service. Destination Rules allow you to define traffic load balancing algorithms, connection pool settings, circuit breakers, TLS settings, and other service-specific configurations.
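As an illustration, the following destination rule for backend-v1 (the sample service used later in this post) sets a load balancing algorithm, connection pool limits, and outlier detection, which is how Istio expresses circuit breaking. The resource name and all numeric values are hypothetical and would need tuning for real traffic.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: backend-v1-dr          # hypothetical name
spec:
  host: backend-v1
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST    # prefer endpoints with fewer active requests
    connectionPool:
      tcp:
        maxConnections: 100    # illustrative limit
      http:
        http1MaxPendingRequests: 50
        maxRequestsPerConnection: 10
    outlierDetection:          # circuit breaking: temporarily eject failing endpoints
      consecutive5xxErrors: 5
      interval: 30s
      baseEjectionTime: 60s
      maxEjectionPercent: 50
```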

Istio ingress gateway

Istio Ingress Gateway is a component of Istio that allows you to expose services outside of the service mesh. It is a load balancer that sits at the edge of the mesh and receives incoming HTTP/TCP connections. It can be used to expose services to the public internet, or to other internal networks.

Request rewriting

Rewrite rules modify requests before they are forwarded to the destination. In a virtual service, they can change the URL path and the authority (host) header, while header manipulation rules can add, set, or remove request and response headers.
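A minimal sketch of a rewrite rule, assuming requests arrive through a hypothetical “api-gateway” Gateway for api.example.com: the /v1/ prefix is stripped before the request reaches backend-v1, a header is set on the request, and another is removed from the response. The resource and header names are hypothetical.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v1-rewrite             # hypothetical name
spec:
  hosts:
  - "api.example.com"
  gateways:
  - api-gateway                # hypothetical Gateway resource
  http:
  - match:
    - uri:
        prefix: /v1/
    rewrite:
      uri: /                   # replaces the matched prefix: /v1/users becomes /users
    headers:
      request:
        set:
          x-api-version: "v1"  # hypothetical header added for the backend
      response:
        remove:
        - x-internal-debug     # hypothetical header stripped from responses
    route:
    - destination:
        host: backend-v1
        port:
          number: 3000
```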

Security policies

Istio supports authentication and authorization policies, mutual TLS (mTLS), and traffic control policies that help secure the mesh.
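For example, mutual TLS can be made mandatory with a PeerAuthentication policy. A minimal sketch, assuming Istio's root namespace is the default istio-system, where a policy named “default” applies mesh-wide; in STRICT mode, workloads accept only mTLS traffic.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system      # assumed root namespace: makes the policy mesh-wide
spec:
  mtls:
    mode: STRICT               # reject plaintext traffic between workloads
```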

Together, all these components provide powerful capabilities for traffic management, routing, and control within the Istio service mesh. Let us now see how these Istio components help to manage API traffic.

Managing API traffic with Istio

Istio makes it easier to configure service-level properties such as circuit breakers, timeouts, and retries, and simplifies tasks like A/B testing, canary rollouts, and staged rollouts with percentage-based traffic splits.

Istio manages traffic through Envoy proxies, so it does not require changes to the services themselves. An Envoy proxy is deployed alongside each service as a sidecar and intercepts all of its data plane traffic, which makes it easy to control traffic across the mesh.
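Sidecar injection is typically switched on per namespace with a label. A minimal sketch, assuming a hypothetical namespace named “api-demo” for the sample backends; pods created in it after the label is applied get an Envoy sidecar, while existing pods need to be restarted.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: api-demo               # hypothetical namespace for the sample apps
  labels:
    istio-injection: enabled   # Istio injects an Envoy sidecar into new pods here
```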

Implementing traffic control mechanisms in Istio involves leveraging the various features provided by the service mesh to manage and control the flow of traffic within the microservices architecture.

Here are some key mechanisms that we can use in Istio:

Request routing

Istio allows us to define routing rules to direct requests to specific services or subsets of services. We can use match conditions based on HTTP headers, URL paths, or other criteria to determine the routing. This enables us to implement canary deployments, A/B testing, or gradual rollouts of new versions.
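A sketch of header-based A/B routing, assuming a hypothetical “x-api-version” request header, a hypothetical “api-gateway” Gateway, and the backend-v1 and backend-v2 sample services used later in this post. Requests that send “x-api-version: v2” reach backend-v2; everything else falls through to backend-v1, because Istio evaluates HTTP routes in order and uses the first match.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: api-ab-test            # hypothetical name
spec:
  hosts:
  - "api.example.com"
  gateways:
  - api-gateway                # hypothetical Gateway resource
  http:
  - match:                     # routes are evaluated in order; first match wins
    - headers:
        x-api-version:         # hypothetical header used to opt in to v2
          exact: "v2"
    route:
    - destination:
        host: backend-v2
        port:
          number: 3000
  - route:                     # default route for all other requests
    - destination:
        host: backend-v1
        port:
          number: 3000
```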

Traffic shifting and mirroring

With Istio, we can perform traffic shifting, which enables us to gradually move traffic from one version of a service to another. We can specify weights for different versions to control the percentage of traffic each version receives. Traffic mirroring allows us to duplicate traffic to a specific version or service for monitoring or testing purposes.
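Traffic shifting with weights is covered by the weighted routing use case later in this post. For mirroring, a sketch under similar assumptions (hypothetical “api-gateway” Gateway and resource name, sample backends on port 3000): all live traffic is served by backend-v1, and a copy of 10% of requests is sent to backend-v2. Mirrored requests are fire-and-forget, so backend-v2's responses never reach the client.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: api-mirror             # hypothetical name
spec:
  hosts:
  - "api.example.com"
  gateways:
  - api-gateway                # hypothetical Gateway resource
  http:
  - route:
    - destination:
        host: backend-v1
        port:
          number: 3000
      weight: 100              # all live responses come from backend-v1
    mirror:
      host: backend-v2         # receives copies; its responses are discarded
      port:
        number: 3000
    mirrorPercentage:
      value: 10.0              # mirror 10% of requests
```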

Rate limiting

We can apply rate-limiting policies to control the number of requests allowed per time interval. Through Envoy, Istio supports both global rate limiting, backed by an external rate limit service, and local rate limiting enforced by each proxy, which helps protect services from traffic spikes or abuse.

Request timeouts

Istio provides mechanisms for configuring retries and timeouts to improve the robustness of the services. We can define how many times a request should be retried in case of failures and specify timeout durations to control how long a request should wait for a response.
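A sketch of the retry and timeout settings described above, assuming the backend-v1 sample service used later in this post on port 3000 and a hypothetical resource name. With no “gateways” field, the rule applies to traffic from sidecars inside the mesh. The values are illustrative, not recommendations.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: backend-v1-resilience  # hypothetical name
spec:
  hosts:
  - backend-v1
  http:
  - timeout: 10s               # overall deadline for the request, including all retries
    retries:
      attempts: 3              # retry up to 3 times
      perTryTimeout: 2s        # deadline for each individual attempt
      retryOn: 5xx,connect-failure,reset
    route:
    - destination:
        host: backend-v1
        port:
          number: 3000
```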

Use cases (with examples)

First, we will deploy a sample application and the Istio API gateway as part of the base setup.

Prerequisites

A Kubernetes cluster

kubectl configured with the cluster's context

Istio installed on the cluster with the demo profile

Make sure your cluster is running istio-base, istiod, and the ingress gateway. Next, set up a Gateway and the sample application, an echo server image, using the manifests from the API Gateway GitHub repo.

Deploy the sample applications backend-v1 and backend-v2:

```shell
kubectl apply -f backend-v1.yaml
kubectl apply -f backend-v2.yaml
```

Then deploy the Istio Gateway:

```shell
kubectl apply -f gateway.yaml
```

With the setup ready, let us implement the Istio features that help with API management on our cluster.

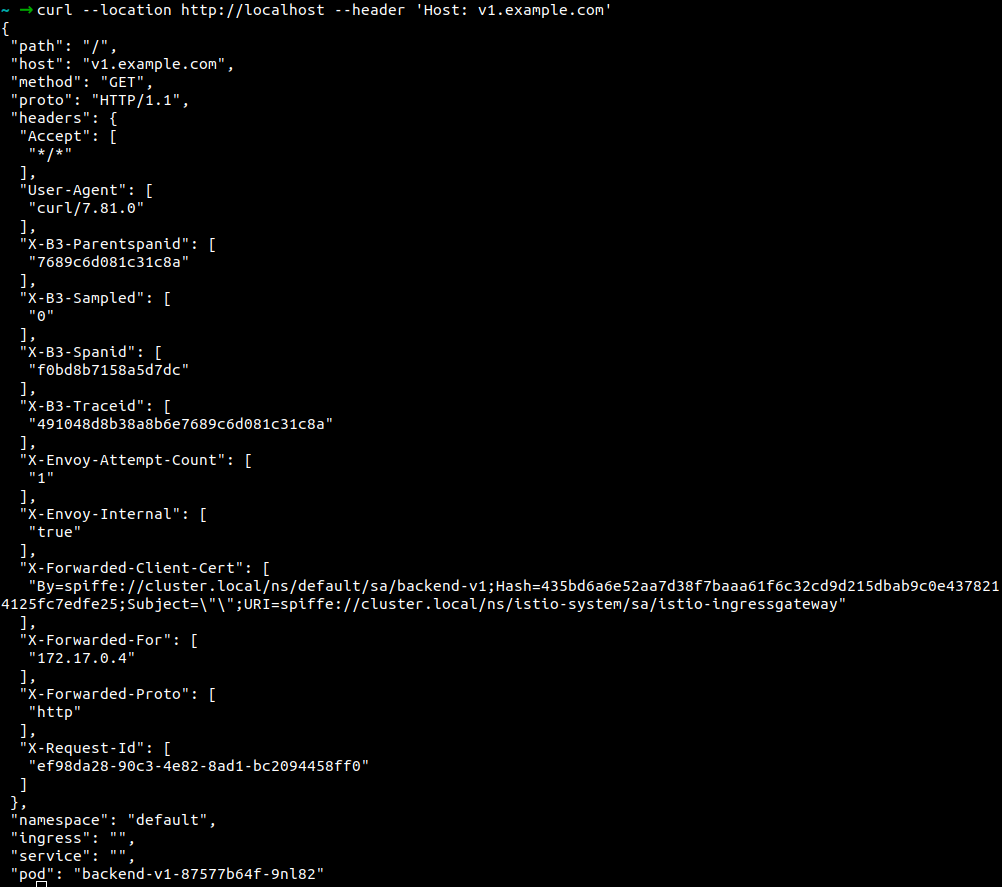

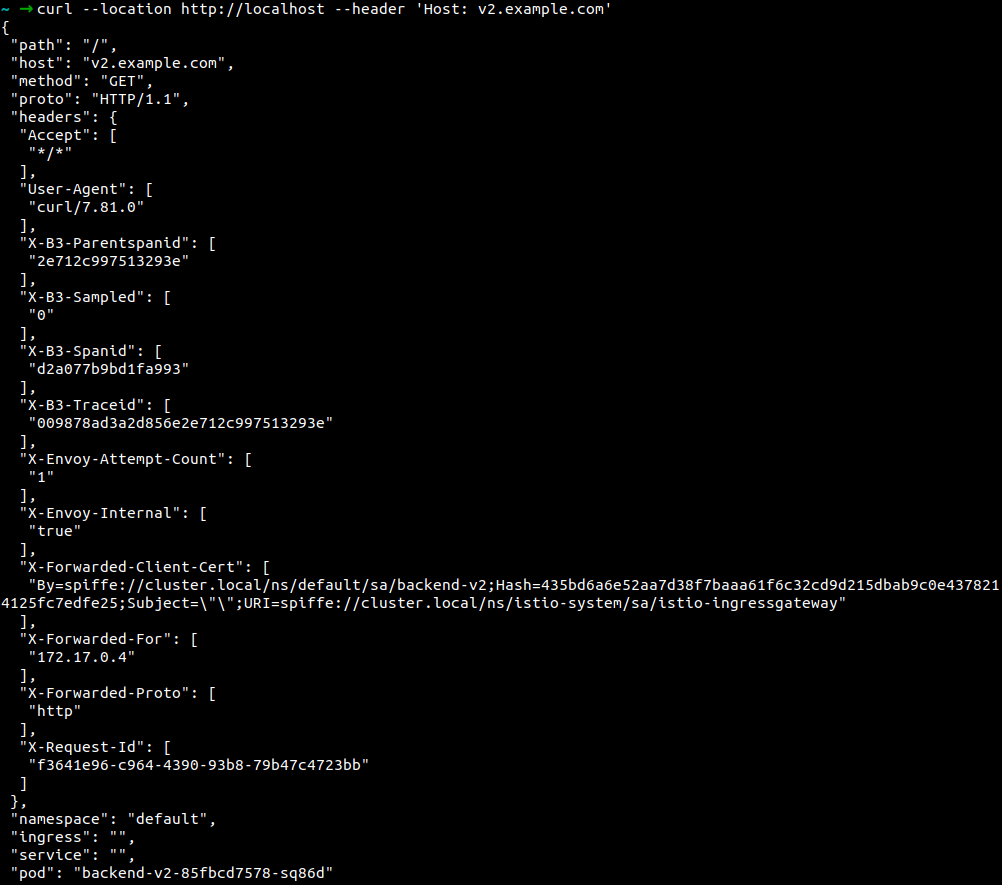

1. Host-Based routing

In the host-based configuration, each virtual service matches requests on the “hosts” field: v1-vs handles v1.example.com and v2-vs handles v2.example.com.

```yaml
apiVersion: networking.istio.io/v1alpha3
# ..
metadata:
  name: v1-vs
spec:
  hosts:
  - "v1.example.com"
# ..
metadata:
  name: v2-vs
spec:
  hosts:
  - "v2.example.com"
# ..
```

Verify via cURL:

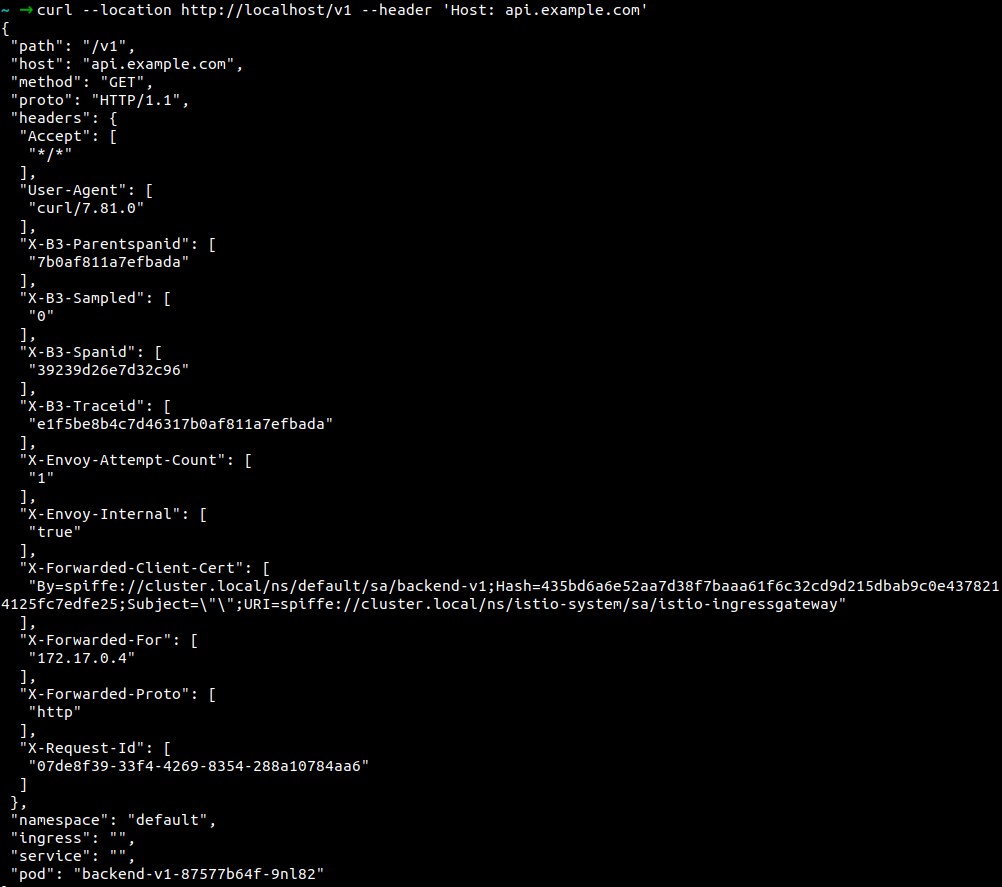

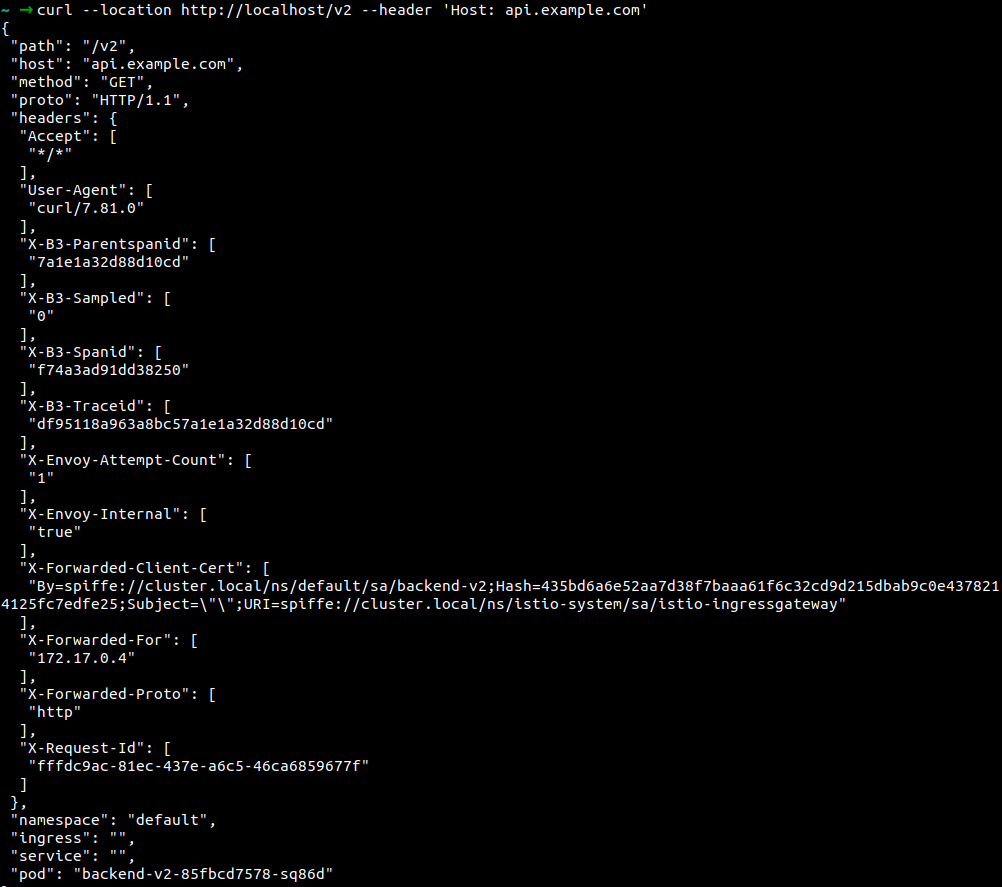

2. Path-Based routing

In the path-based configuration, we match the “uri” field with a “prefix”, so requests to /v1 are routed to the backend-v1 application and requests to /v2 to the backend-v2 application.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v1-vs
# ..
  http:
  - match:
    - uri:
        prefix: /v1
    route:
    - destination:
        host: backend-v1
        port:
          number: 3000
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v2-vs
# ..
  http:
  - match:
    - uri:
        prefix: /v2
    route:
    - destination:
        host: backend-v2
        port:
          number: 3000
```

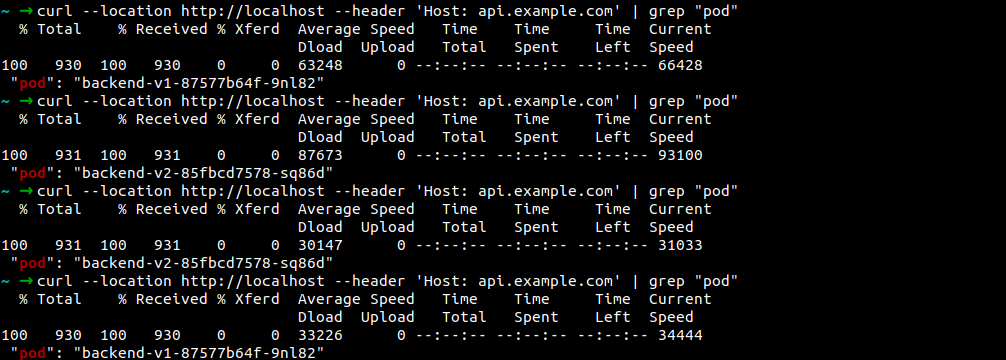

Verify via cURL:

The location path is /v1.

The location path is /v2.

3. Rate limiting

The following rate limit manifest limits the number of requests to a specific microservice API, /v1.

The rate limiter uses a token bucket with a capacity of 10 tokens (max_tokens) that is refilled with 10 tokens (tokens_per_fill) every minute (fill_interval), which allows up to 10 requests per one-minute interval. We also enable the rate limiter (filter_enabled) for 100% of the requests and enforce it (filter_enforced) for 100% of them. Because this is a local rate limit, each Envoy proxy keeps its own token bucket.

Manifest file: rate-limit.yaml

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: v1-ratelimit
# ….
          typed_config:
            '@type': type.googleapis.com/udpa.type.v1.TypedStruct
            type_url: type.googleapis.com/envoy.extensions.filters.http.local_ratelimit.v3.LocalRateLimit
            value:
              stat_prefix: http_local_rate_limiter
              enable_x_ratelimit_headers: DRAFT_VERSION_03
              token_bucket:
                max_tokens: 10          # bucket capacity
                tokens_per_fill: 10     # tokens added on each refill
                fill_interval: 60s      # refill period
              filter_enabled:           # share of requests the rate limiter evaluates
                runtime_key: local_rate_limit_enabled
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              filter_enforced:          # share of over-limit requests actually rejected
                runtime_key: local_rate_limit_enforced
                default_value:
                  numerator: 100
                  denominator: HUNDRED
              response_headers_to_add:  # added to responses of rate-limited requests
              - append_action: APPEND_IF_EXISTS_OR_ADD
                header:
                  key: x-rate-limited
                  value: TOO_MANY_REQUESTS
              status:
                code: BadRequest        # HTTP 400; Envoy defaults to 429 (TooManyRequests) if status is omitted
```

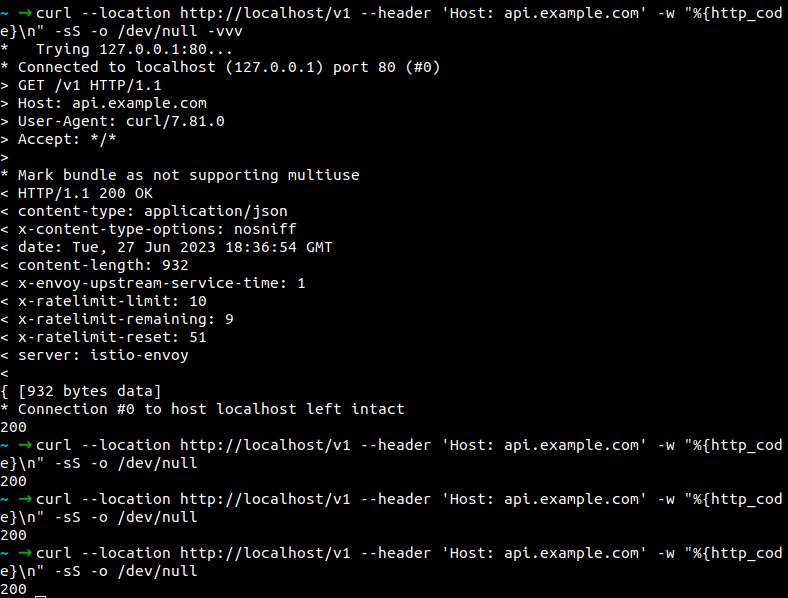

Verify via cURL:

The rate limit is 10 requests per minute, and at this point the requests still pass.

Once 10 requests have been made within the minute, the limit is reached and further requests are rate limited.

4. Weighted routing

In this example, the weight manifest splits requests 50-50 between the v1 and v2 backends. The “weight” field sets the percentage of requests routed to each destination host.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v1v2-vs
# ….
  http:
  - route:
    - destination:
        host: backend-v1
      weight: 50
    - destination:
        host: backend-v2
      weight: 50
```

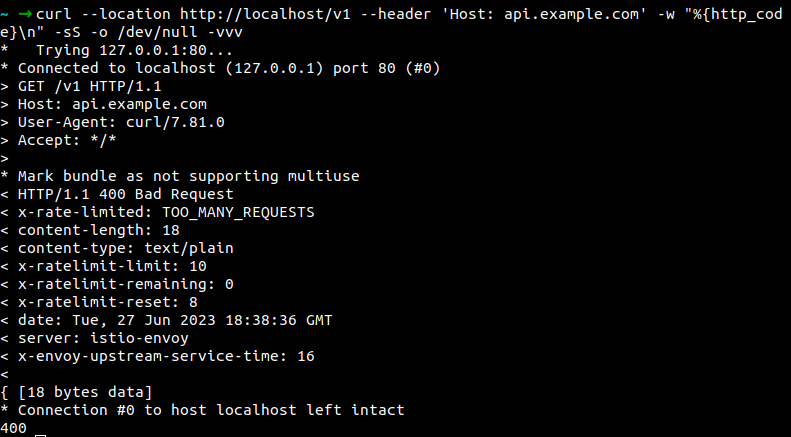

Verify via cURL:

For host api.example.com, requests are split 50-50 between v1 and v2.

5. Authentication

This auth manifest shows how to configure JWT authentication; with cURL, the client authenticates the request by sending a token. We use “RequestAuthentication” to define how JWTs are validated and “AuthorizationPolicy” to require a valid token (a request principal) for backend-v1. RequestAuthentication alone does not reject requests that carry no token, which is why the AuthorizationPolicy is needed.

```yaml
apiVersion: security.istio.io/v1
kind: RequestAuthentication
# ….
    matchLabels:
      app: backend-v1
  jwtRules:
  - issuer: "testing@secure.istio.io"
    jwksUri: "https://raw.githubusercontent.com/istio/istio/release-1.18/security/tools/jwt/samples/jwks.json"
---
apiVersion: security.istio.io/v1
kind: AuthorizationPolicy
metadata:
# ….
    matchLabels:
      app: backend-v1
  action: ALLOW
  rules:
  - from:
    - source:
        requestPrincipals: ["testing@secure.istio.io/testing@secure.istio.io"]
```

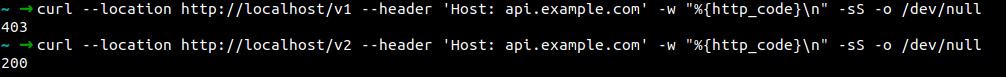

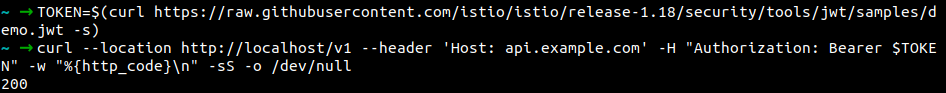

Verify via cURL:

Requests to /v1 fail without the JWT token, while requests to /v2 succeed because authentication is not enabled for backend-v2.

With a valid token, requests to /v1 succeed.
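The manifests above enforce JWT at the backend-v1 sidecar. As an alternative sketch, the same rule can be enforced at the ingress gateway, so requests to /v1 without a valid token are rejected before they enter the mesh. It assumes the ingress gateway pods run in istio-system with the default “istio: ingressgateway” label, reuses the sample issuer and JWKS URL from above, and uses hypothetical resource names. The DENY rule rejects requests on /v1 paths that have no request principal, while requests carrying an invalid token are already rejected by RequestAuthentication.

```yaml
apiVersion: security.istio.io/v1beta1
kind: RequestAuthentication
metadata:
  name: ingress-jwt                  # hypothetical name
  namespace: istio-system            # assumed namespace of the ingress gateway pods
spec:
  selector:
    matchLabels:
      istio: ingressgateway
  jwtRules:
  - issuer: "testing@secure.istio.io"
    jwksUri: "https://raw.githubusercontent.com/istio/istio/release-1.18/security/tools/jwt/samples/jwks.json"
---
apiVersion: security.istio.io/v1beta1
kind: AuthorizationPolicy
metadata:
  name: require-jwt-for-v1           # hypothetical name
  namespace: istio-system
spec:
  selector:
    matchLabels:
      istio: ingressgateway
  action: DENY
  rules:
  - from:
    - source:
        notRequestPrincipals: ["*"]  # requests without a valid JWT
    to:
    - operation:
        paths: ["/v1", "/v1/*"]
```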

6. CORS and Pre-flight

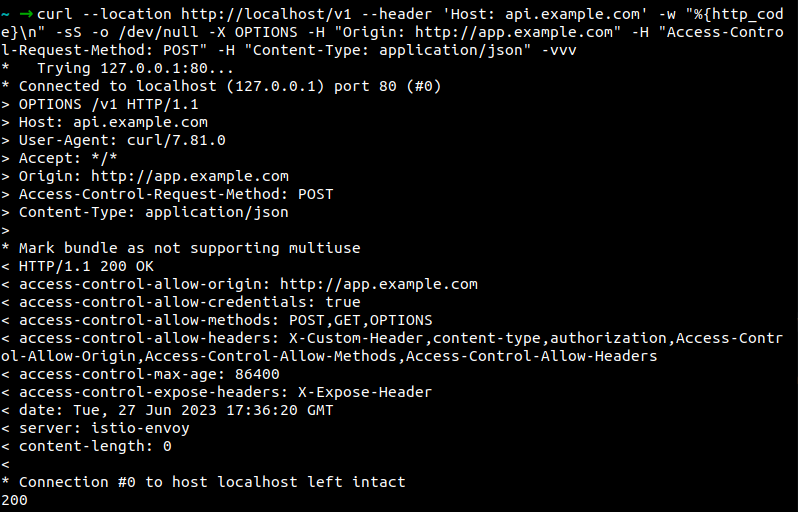

In this example, the CORS manifest shows a configuration that handles CORS and pre-flight requests. We use the cURL client to act as an end user's browser sending POST requests, and we define the CORS headers in the “corsPolicy” section.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v1-vs
# ….
    corsPolicy:
      allowOrigins:
      - exact: "*"              # accepts any origin
      allowMethods:
      - POST
      - GET
      - OPTIONS
      allowHeaders:
      - X-Custom-Header
      - content-type
      - authorization
      - Access-Control-Allow-Origin
      - Access-Control-Allow-Methods
      - Access-Control-Allow-Headers
      exposeHeaders:
      - X-Expose-Header
      maxAge: 24h               # how long browsers may cache the pre-flight result
      allowCredentials: true
```

Verify via cURL:

The OPTIONS pre-flight for the POST request is answered with the headers defined in the CORS policy.
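The policy above accepts any origin. To allow only some cross-origin requests while rejecting others, the allowed origins can be listed explicitly. A sketch, assuming a hypothetical front-end origin https://app.example.com, hypothetical partner subdomains, and a hypothetical “api-gateway” Gateway: responses to other origins should not carry a matching Access-Control-Allow-Origin header, so browsers block those cross-origin requests.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: v1-vs-cors-strict      # hypothetical name
spec:
  hosts:
  - "api.example.com"
  gateways:
  - api-gateway                # hypothetical Gateway resource
  http:
  - match:
    - uri:
        prefix: /v1
    corsPolicy:
      allowOrigins:
      - exact: "https://app.example.com"                        # hypothetical front-end origin
      - regex: "https://[a-z0-9-]+\\.partner\\.example\\.com"   # hypothetical partner subdomains
      allowMethods:
      - GET
      - POST
      allowHeaders:
      - content-type
      - authorization
      maxAge: 24h
      allowCredentials: true
    route:
    - destination:
        host: backend-v1
        port:
          number: 3000
```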

With these use cases, we can control traffic based on our requirements and troubleshoot the API gateway more easily. Because all these changes are made in Istio configuration at the gateway and mesh level, the application itself does not need to change. With the Kubernetes Gateway API alone, we may not be able to control API management to this level of detail. Let us look at how Istio Gateway differs from the Kubernetes Gateway API, so we can decide when we actually need it.

Difference between Istio Gateway and Kubernetes Gateway API

Deployment: In Istio, a Gateway resource configures an existing gateway Deployment and Service, which it selects through its “selector” field. In the Kubernetes Gateway API, the Gateway resource both configures and deploys a gateway by default; linking it to an existing, manually deployed Service requires extra configuration.

Feature set: The Kubernetes Gateway API does not yet cover 100% of Istio's feature set. For example, it supports weighted routing through backend weights on an HTTPRoute, but it has no equivalent of Istio's fault injection, which is useful for building and testing a robust gateway.

In general, the Kubernetes Gateway API is a simpler and more lightweight solution than Istio Gateway, while Istio Gateway offers a wider range of features that can make it a better fit for large-scale production applications. Let us look at some of the advantages of using Istio Gateway.

Advantages of using Istio Gateway as an API gateway

Centralized control: Istio Gateway can be centrally managed and configured through a set of YAML manifests, which makes it easier to manage and troubleshoot your API gateway.

Flexibility: Istio Gateway is flexible and can be used to implement a wide range of API gateway features, such as load balancing and host-based and path-based routing.

Performance: Istio Gateway is built on Envoy and designed to handle high volumes of traffic. It also integrates easily with managed Kubernetes services such as Amazon EKS, Azure AKS, and Google GKE.

Security: Istio Gateway can secure your APIs with features such as mutual TLS, authentication, and authorization, which helps protect them from unauthorized access and malicious attacks.

Load balancing: Istio Gateway can load balance traffic across multiple instances of an API, which improves the performance and availability of your APIs.

Routing: Istio Gateway can route traffic to different versions of an API, which supports faster releases and testing.

Summary

Overall, Istio Gateway is a powerful and flexible tool for offloading API gateway functionality. It is a good option for organizations looking for a centralized way to manage and secure their APIs. If you're exploring API management solutions, our service mesh experts can help you understand and choose the best solution for your production deployments.

In this blog post, we have demystified API management and the challenges one faces while managing it. With the help of Istio Gateway and traffic management policies, we have seen how API management can be simplified using a sample application.

I hope you found this post informative and engaging. For more posts like this one, do subscribe to our newsletter for a weekly dose of cloud-native. I’d love to hear your thoughts on this post. Let’s connect and start a conversation on Twitter or LinkedIn.